ETL Pipeline with Python and Spark

Data Science • June 8, 2025

ETL Pipeline Portfolio Project

Overview

You have been contracted to design and implement a data pipeline that retrieves regional air quality data from the Open Bristol Data Service, preprocesses and transforms the data into the required format, and stores it in both a PySpark DataFrame and a local PostgreSQL database.

The code should be clean, well written and well documented. Ensure functionality is encapsulated in functions so you can reuse them in other projects.

Tasks

1. Retrieve data from the API

Using Python's requests library, retrieve data from the service's API via its GeoJSON link. Consider implementing error handling to mitigate problems connecting to the API service.
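As a starting point, the retrieval step might look something like the sketch below. It is not the service's official client: the function name `fetch_geojson`, the retry count, the back-off and the timeout are all illustrative assumptions, and the real GeoJSON URL has to be taken from the data service itself.

```python
import time

import requests


def fetch_geojson(url, session=None, retries=3, backoff=1.0, timeout=10):
    """Fetch a GeoJSON document, retrying on connection or HTTP errors.

    All parameter names and defaults are assumptions made for this sketch.
    """
    if retries < 1:
        raise ValueError("retries must be at least 1")
    if session is None:
        session = requests.Session()

    last_error = None
    for attempt in range(1, retries + 1):
        try:
            response = session.get(url, timeout=timeout)
            response.raise_for_status()  # turn 4xx/5xx responses into exceptions
            return response.json()
        except (requests.RequestException, ValueError) as exc:
            # ValueError covers a response body that is not valid JSON
            last_error = exc
            if attempt < retries:
                time.sleep(backoff * attempt)  # wait a little longer each time

    raise RuntimeError(f"Failed to fetch {url} after {retries} attempts") from last_error


# Example call (placeholder URL, not the real endpoint):
# data = fetch_geojson("https://example.org/air-quality.geojson")
```

Accepting an optional `session` keeps the function easy to test without network access, since a stub object with a `get` method can be passed in instead.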

2. Data Transformation

Because the loaded data will eventually be used by Data Scientists and Analysts, the coordinates of each record need to be standardised to values between 0 and 1. This prevents the latitude values from taking precedence over the longitude values in potential machine learning applications.
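Before the coordinates can be scaled they have to be pulled out of the GeoJSON response. The sketch below assumes each record is a GeoJSON `Feature` with a `Point` geometry, whose `coordinates` array is ordered longitude first, then latitude, as the GeoJSON specification defines. The function name `extract_coordinates` and the output keys `longitude` and `latitude` are hypothetical, and the actual dataset may structure its records differently.

```python
def extract_coordinates(feature_collection):
    """Flatten GeoJSON Point features into a list of plain dictionaries.

    Assumption: records without a Point geometry are skipped.
    """
    rows = []
    for feature in feature_collection.get("features", []):
        geometry = feature.get("geometry") or {}
        if geometry.get("type") != "Point":
            continue
        # GeoJSON positions are ordered [longitude, latitude, (optional altitude)]
        longitude, latitude = geometry["coordinates"][:2]
        properties = feature.get("properties") or {}
        rows.append({**properties, "longitude": longitude, "latitude": latitude})
    return rows
```

The resulting list of dictionaries is straightforward to scale column by column and to load into a DataFrame afterwards.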

Create a function from first principles that normalises a given value between 0 and 1. Write at least two assertion tests for this function to ensure it works as intended before you run the full application.

Consider writing a flow chart for the function before you implement it to ensure the logic is sound.
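One possible shape for the function is min-max scaling, sketched below with its assertion tests. The names `normalise` and `normalise_column` are illustrative, and returning 0.0 when the minimum equals the maximum is an assumption about how a constant column should be handled, not a requirement from the brief.

```python
def normalise(value, minimum, maximum):
    """Min-max scale a value into the range 0 to 1."""
    if maximum < minimum:
        raise ValueError("maximum must not be smaller than minimum")
    if maximum == minimum:
        return 0.0  # assumption: a constant column maps to 0.0
    return (value - minimum) / (maximum - minimum)


def normalise_column(values):
    """Scale every value in a non-empty list using the list's own min and max."""
    lowest, highest = min(values), max(values)
    return [normalise(v, lowest, highest) for v in values]


# Assertion tests, run before the full application
assert normalise(5, 0, 10) == 0.5
assert normalise(-2.7, -2.7, -2.5) == 0.0
assert normalise(-2.5, -2.7, -2.5) == 1.0
```

Applying `normalise_column` separately to the latitude and longitude columns places both on the same 0 to 1 scale. A value outside the supplied minimum and maximum would fall outside that range, so the bounds should come from the data being scaled.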

Project Details

- Type: Data Science

- Date: June 8, 2025

-

Technologies:

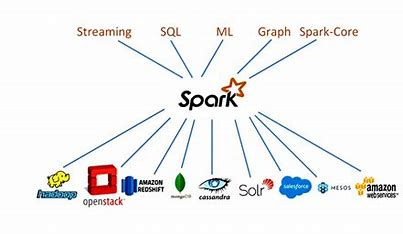

Python, Spark, postgres